Introduction

This chapter explains what has drawn me into the world of iPad music making and what I find interesting in it. Why am I doing this research, what is its background, and what questions am I trying to answer? The research leans heavily on my own practice as an iPad musician, so this chapter also briefly explains the practice that is included in the research process.

Research interest

This section explains my motives for doing this research. What makes the iPad an interesting object of study? What does it bring that is new to the music-making process, especially in live performance?

The iPad is not a musical instrument by design, but many of the applications available for it are versatile musical instruments. In this research I want to explore and exploit the ways the iPad can be used in live situations and show interesting uses for it as a musical instrument. In addition, I want to use live visuals and project to the audience what’s happening on stage and on the touch screen. In order to fully concentrate on the capabilities of the iPad, I’m building a solo live performance.

I’ve been using the iPad as a musical instrument since 2012, as an equal alongside my bass, guitar and laptop. In fact, I started with the iPad for no special reason. However, compared to other tablets, the iPad has the largest selection of musical apps available at the moment. Furthermore, iOS, the operating system of the iPad, has shorter latency[1] than Android (Szanto & Vlaskovits 2015). This may be the main reason why the iPad works better as a musical instrument than other tablets, at least when compared to Android devices.

The research focuses on live situations. The iPad also works in other phases of the music-making process: it can be used as a portable digital recording studio or as a handy digital notepad when composing. However, the iPad is particularly interesting as a live instrument. Just plug in your iPad and you’re ready for the gig. The possibilities for human-computer interaction are more readily at hand with the iPad than with regular laptop computers. The iPad has a fairly big touch screen for playing, built-in sensors such as an accelerometer and a gyroscope for controlling the resulting sound, and protocols such as MIDI and Bluetooth for communicating with other players and musical instruments. I focus on the iPad in a live situation and exclude the recording and practicing phases from the research.

Computers have been used for making music for more than 50 years (Elsea 1996). In principle the iPad is only a portable computer, and by modern computing standards not a very powerful one. Many things that can be done with an iPad can be done faster and more easily with a laptop. On the other hand, many things can be done with the iPad that would be very difficult with a laptop. That’s interesting to me.

The first chapter is the introduction. In the second chapter I lay out the methods and define myself as an artist. The third chapter is the theoretical part, which locates the practice within the theoretical discourse of Computer Music and NIME[2]. The practice part begins in the fourth chapter, which describes the different elements for building a musical performance using an iPad. It continues in chapter five, which lists interesting iPad apps and reflects on them from my artistic perspective. I select the apps based both on how interesting I as an artist find them and on how they can be seen as interesting instruments because they exist on an iPad and not, for example, as desktop applications. The selection includes the apps that I use for the compositions, and I analyze what’s special about them. In chapter six I go through building the setup for the live performance, both audio and visuals. Chapter seven is the conclusion, where I, as an artist-researcher, go through the composition process and the outcome. Chapter eight discusses next steps for the research.

Background

This section explains how my previous experience supports the idea of doing this research as artistic research.

I’m in a duo called Haruspex, where my main instrument is the iPad. I’ve used the iPad as an instrument in various gigs but I’ve never really analysed what I’ve been doing. There isn’t much prior research on using the iPad as a live instrument. In this research I would like to break down the use of the iPad as a live instrument into pieces that can be discussed further.

Along with the gigs with Haruspex, one of my most important gigs was a concert with iPad Orchestra in late 2014. iPad Orchestra was an ensemble formed after a year-long course at the Sibelius Academy in 2013–2014. The aim of the course was to discuss and explore the possibilities of a tablet computer as a musical instrument. I took part in the course and became even more interested in the subject. We ended up using iPads instead of other tablets because they had the best selection of musical apps available at the time. The course culminated in a final concert where we played iPads as a band of six players and also together with other, more traditional instruments[3]. The concert was well received by the audience. Many interested people came to us after the concert to ask about the apps we were using.

There is existing research on a particular iPad app as a musical instrument (Trump & Bullock 2014) and a book about the iPad as a musical instrument (Johnston 2015), and I think more will be written about the subject in the near future. Currently, however, this kind of literature is not very abundant. The whole field of mobile technology is developing so quickly that it’s hard to keep track of what’s happening and what possibilities exist. The iPad book, together with electronic music guides and numerous how-to-use-specific-app tutorials on YouTube, forms a good background for anyone starting to make music with the iPad. It’s important to set the research background somewhere and this is my attempt to do so. I will do it as artistic research, using my own art as the basis.

Brown and Sorensen (2008, p. 156) argue that a practitioner is not equivalent to a researcher and that researchers shouldn’t believe they can automatically be practitioners. I somewhat disagree. Of course, a practitioner is not automatically a good researcher, or vice versa. However, Klein (2010, p. 1) claims that many practitioners already do a lot of research when preparing their practice, and I believe that this knowledge is transferable to research. It’s essential to find a suitable method for researching the practice (Hannula et al, 2003, p. 13). I do agree with Brown and Sorensen (2008, p. 156) that this requires a certain capability in both domains. I use my experience of using the iPad as a live instrument as a starting point for outlining the actual practice part of this research. Additionally, I wanted the research to make me compose more music and analyze what I am doing – and by making me build a whole solo live performance around the iPad, it surely does this.

Research questions

This section lays out the research questions. One of them is the main question; the other three follow from it.

Sometimes iPad apps drive me mad; sometimes I love them. Usually I feel that I spend too little time with them to truly explore all of their possibilities. I think that there are certain things that make the iPad a really nice live instrument: it can be used as an electronic music device that accepts input in a non-discrete manner, a bit like traditional instruments. My personal interest lies in the intersection of those two worlds. I want to find out how a live set can be built using the iPad as its centre point.

Main question:

How to build an interesting and musically versatile solo performance with iPad?

Subquestions:

How does the iPad function as a musical instrument in a live situation?

What is an iPad good for in musical live performance?

What is an iPad not good for and what are its limitations in musical live performance?

Additionally, I want to find new ways to enhance my creativity. I believe that the iPad lies in a field that has a lot to give, already now and especially in the future.

I anchor the research in computer music and NIME research. I use my own practice and art as the basis for the research. The method is called Practice as Research. Throughout the research process I experiment with different interesting apps and keep a diary at the same time. I compose songs for the iPad – for myself to perform, using only the iPad – and I explain how the compositions are constructed. Then, as a final result, I shoot videos of the performances. This is also a good way to make sure I’m actually able to perform the compositions live.

I go through the compositions in the light of NIME and computer music theories by answering questions like “do I make use of the methods of computer music?”, “does the iPad as live instrument enable expressivity in live performance?” and most importantly, “what are the building blocks and methods of building the solo live performance with iPad?”

Practice

This section gives an overall view of the practice part of the research.

This is artistic research, which I locate in theory in the literature review in chapter three. I am the artist who produces the art that is used as research material.

The actual practice part of the research consists of four compositions for the iPad. The compositions are intended to be performed live, but within the scope of this research I shoot videos of the performances in a studio. However, I want the compositions to require practice. It won’t be just pressing play buttons. By practicing I’m able to determine what an iPad is good for and not so good for, and what its limitations are. I want to see how much presetting needs to be done before the gig and to what extent the different apps and ways of playing leave room for spontaneity and improvisation, both of which are positive qualities for me.

When readers review the results of this research they should take into consideration my artistic ideas and tendencies, which are described in chapter two. Zappi and McPherson (2014) have shown that even a simple instrument can lead to a wide variety of musical styles. This research is a subjective interpretation of what the selected apps can do, but it can provide insight and inspiration for others, too. I aim to give results that can be applied in many ways.

The goal of this research is not to compare the iPad with other ways of doing similar things; that is not at the core of this research. For me as an artist it’s important and interesting to find out how the iPad enhances the creative thinking and creativity of a musician. In many parts of the research I rely on my own intuition in finding out what feels new and interesting to me. I’ve been using the iPad in live gigs without analysing the performance. I know what has been working for me and what has been difficult, but now I’d like to dig a bit deeper and find out why certain things work and other things don’t.

Method

In artistic research, the researcher tries to be in a relationship with the subject (Hannula et al 2003, p. 46). It’s important to find out what expectations, needs, interests and fears the researcher has towards the research subject (ibid.). I interpret this guidance to mean that it’s important to write down my feelings towards the topic, the research and the practice. The goal of this chapter is to describe the general qualities of artistic research, the process of this research and my artistic tendencies.

Artistic research

The point of this section is to empower me to do the research as I intend to do it and to describe at a high level how artistic research should be carried out.

Hannula et al (2003, p. 9) write about artistic research: “Results of the research, the outcome, are a surprise for the researcher(s).” This idea inspired me to do my master’s thesis as artistic research. I want to explore the unknown. I want to do something experimental. There’s something similar in what Hélène Cixous (2008, pp. 145–147) writes: “So, you’re tracing a secret that is escaping. You’re approaching the secret and it escapes. Painting or writing takes place when you’re tracing a secret, as a matter of fact, painting or writing is tracing a secret.” I feel that doing artistic research is similar to what Cixous describes – I want to trace a secret. According to Barrett and Bolt (2007, p. 5) artistic research provides a more profound model of learning – “one that not only incorporates the acquisition of knowledge pre-determined by the [researcher’s] curriculum – but also involves the revealing or production of new knowledge not anticipated by the curriculum.” (ibid.)

According to Hannula et al (2003, pp. 13–14) artistic research should only be done if the research has an impact on the art and the art has an impact on the research. Thus the research has the potential to shed both artistic and scientific light. The whole research process should be tightly coupled with the art. If the art has no impact on the research part, then Hannula et al (2003, pp. 13–14) claim that the artistic part is separate from the research and it doesn’t make much sense for the researcher to be the artist of the research.

I base my research on the ideas and approach of Hannula et al (2003), who formed the basis of artistic research in Finland. Hannula et al (2003, p. 16) highlight that the artistic side and the scientific side of the research should interact with each other throughout the research. Only then can the research be critically reflected on by the artist-researcher. An important question is (Hannula et al 2003, pp. 16–17): how does the experience of the artist guide the formation of theoretical knowledge – and vice versa: how do reading, thinking and theoretical discussion guide the artistic experience? It’s important that the artist-researcher explicitly describes all the hermeneutical loops, re-evaluations of the topic and choices of discourse that the artistic experience leads to (ibid. p. 17).

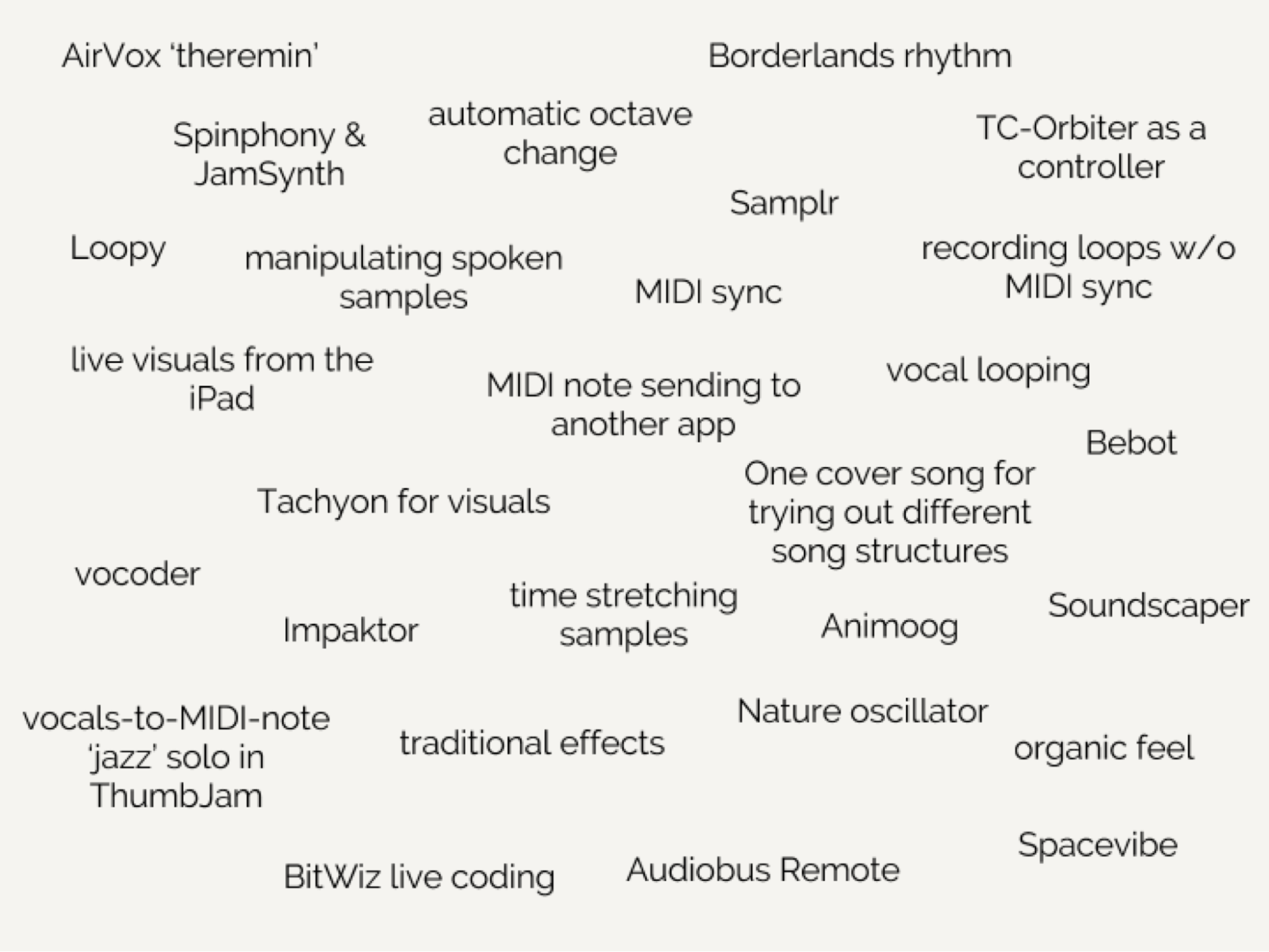

In my case the normal research cycle begins with me reading internet forums on musical iPad apps and getting inspired by a new app or a feature that someone has discovered. After discovering something interesting I start jamming, and after the jamming session I write a few sentences about it, answering questions like ‘what did I find interesting in the session?’, ‘what problems did I have?’ and ‘what settings do I need in order to do it again?’. Then, based on the diary entries, I record some kind of demo as an audible diary entry. With the demos I write more specific instructions that will become a composition at some point. Finally, I read research papers on the subject, putting the research into a context for myself.

This artistic research cycle is depicted in Figure 1. It is not as strict as it sounds, though. During the research I jump between different tasks and let the whole process inspire me. In fact, the art inspires the research as much as the research inspires the art.

Figure 1. My research process cycle.

I also have a university education in engineering, and I have been involved in software projects. I’m highly interested in what technology, especially mobile technology, will bring us in the future. I wish this to be part of my artistic identity and I want to make music using methods that are inspired by technology. In my opinion this guides the artistic experience fairly drastically. One of the possible paths for this master’s thesis was to develop a musical instrument app of my own. But then I realised there’s still so much uncharted territory in the existing iPad apps that I want to go there first. All the brilliant developers deserve to have their inventions put to use. Maybe the time for my own app will come later. In general, my background in technology and engineering practices is definitely one of the reasons why I’m interested in new innovations in interfaces and ways to create interesting soundscapes.

Another essential element in good research is criticality (ibid. p. 25). Scientific ideas should be compared with empirical results in order to test them systematically and discard erroneous ones. In this research, the concepts of performing live with an iPad are tested in practice and erroneous perceptions are discarded in the conclusion. For example, if I assume that I’m able to build a rhythmic song without syncing the tempo between different apps, I have to state clearly whether it is possible or not. If it is not, I need to find another way to sync rhythmic elements between the apps. If that proves too difficult, I need to describe the process clearly and state that the assumption was erroneous.

How can the artist as a researcher maintain critical distance from the research process? Barrett and Bolt (2007, pp. 140–141) list three things to consider. Firstly, the researcher should locate himself in the field of theory and practice in the literature review. Secondly, the researcher should have a clear methodological and conceptual framework in which the researcher argues and demonstrates, using terms like “I conclude” and “I suppose” as they relate to the hypothesis and design of the project. Thirdly, the researcher should discuss the work in relation to lived experience, other works, application of results obtained, contribution to discourse, new possibilities, obstacles encountered and the remaining problems to be addressed in future research.

I think these three points are covered in this research. The field of theory is discussed in the next chapter. For the second point I’ve adopted a fairly personal research approach, relating the theory to my own thinking. The third point is rather vast, but wherever applicable, I relate the research to my career on a larger scale. I think keeping a diary helps with the conclusions.

In addition, Barrett and Bolt (2007, p. 139) emphasize that the researcher should make a clear statement of the origin of ideas, reflecting on them in relation to current and previous projects: “The researcher should trace the genesis of ideas in his/her own works as well as the works/ideas of others and compare them and map the way they inter-relate and examine how earlier work has influenced development of current work and identify gap/contribution to knowledge/discourse made in the works. The researcher should also assess the work in terms of the way it has extended knowledge and how his/her own work as well as related work has been, or may be used and applied by others.”

There’s not much academic discourse on the topic of this research, but a lot of material produced by other iPad musicians is available online. Sometimes, when the creative process is heavily based on intuition, it’s not possible to trace the influences, but whenever I can clearly state where an influence comes from, I’ve noted it in the diary I’m keeping.

Klein (2010, p. 4) argues that there is no real distinction between scientific and artistic research. Both aim at gaining broader knowledge within the field of the research. Artistic research can therefore also be scientific (Ladd 1979, in Klein 2010, p. 4). Artists also argue that the definition of science is somewhat ambiguous (Hannula et al, 2003, p. 10). I agree that this might be a good answer to those who criticize artistic research for its lack of scientific methods.

In this research I adopt the freedom of artistic research and engage in it without thinking how I should categorize the research in the academic world. After all, I have a research question and ways and means to find an answer to that. I think that will take me quite far.

Myself as a musician

My iPad musicianship is defined by my previous experience with the iPad, and it is used as a point of reflection in this research. The goal of this section is to set expectations about what kind of music I’m going to make, what my artistic choices in the music are and what experience I have in using the iPad as a live instrument. Experience, feelings and aesthetics are important concepts in that.

Being a musician often requires virtuosic handling of the chosen instrument. However, I don’t think I master any instrument so well that I could be hired by an orchestra to play parts from sheet music. I’ve never practised any instrument for an extended period of time, but somehow I’ve always had a drive to become a musician. Hours of independent practice of fingerings and scales haven’t appealed to me, and I haven’t been able to define what kind of musicianship is my cup of tea. I started studying engineering, but I’ve always regarded music as my dearest pastime.

My growth as a musician has followed very ordinary lines. My parents made me play the piano when I was eight. After a few months I wanted to quit. My parents warned me: “If you quit, someday you’ll regret it.” And how painfully right they were! Fortunately, a few years later, my good friend Juha asked me to start playing the bass because he wanted to play the guitar. That’s how it finally got started. We’ve been playing partners ever since and I’ve been able to call myself a musician.

Nowadays I tend to define my musicianship using the following five points of view.

- Sense of musical community and communication

- Emphasis on live performance

- Experimental pop as genre, with controversiality and surprises

- Physicality and honesty in live performance

- Playfulness

The first characteristic that defines me as a musician is the sense of musical community and communication. A few years after starting to play the bass I realized what appealed to me in music and what was at the core of my musicianship. It wasn’t just the fame and fortune of potential rock stardom. It was those moments when I was playing together with the band and we communicated through playing. We were even able to tell each other jokes and convey feelings by playing. It was a new experience for me. Improvisation played a significant role in this. I admire virtuosity and virtuoso players, but I don’t think virtuosity matters if the music that’s being played doesn’t suit the social context, or if it doesn’t deliver the feelings it’s supposed to.

“If you want to make it to the top. Practice.” Maybe those weren’t the exact words, but that’s how I remember a Sprite commercial from the 90s telling you how amateur basketball players would make it to the NBA. What’s the NBA of musicians? Perhaps pondering over that has led me to a situation where practicing hasn’t exactly been at the heart of my musical development. There are moments when I regret that. I enjoy practicing and seeing my development, but I often feel that I need accompaniment, I need other people to play with. I need band mates.

But as I’ve grown older I’ve realised it’s more and more difficult to find common free time to practise regularly. So I needed to take another approach to find the motivation to practise. I found that in live performance. If there’s no band to play with, I need an audience to play for. That’s why live performance is such an important aspect for me. It makes me practise, and perhaps some day it will pay off and I’ll notice I’ve become some sort of virtuoso myself. I enjoy spending time in the studio and being absorbed in the sounds and music there, but – to me – the true essence of music is playing live. The second characteristic of my musicianship is emphasis on live performance.

The third thing that defines me as a musician is related to how I feel about musical genres. It may be said that currently my genre is electronic music, but I’m not leaning on the traditions of electronic music. I want to explore how electronic and computational means can be used to create music that defies genres.

There is one feature that prevails in my personality: I want to please other people. This usually means that I lean towards pop music. However, I want to surprise people and do tricks, and that’s not usual in pop. Perhaps, as the little brother in my family, I’ve become the entertainer without the need to take responsibility. I can concentrate on doing tricks. Some of my idols in music, e.g. groups like Animal Collective, The Books and Battles, are quite experimental but still maintain something ‘pop’ in their music. I want to present contradictions.

The fourth aspect that defines me as a musician is the set of traits that I think make a good live performance: physicality and honesty. I like it very much when a musical performance is a physical performance at the same time. I find live coding[4] a very interesting subject, but it lacks the physicality that is often tied to traditional instruments. I also think that laptops in general lack the physical aspect as live instruments, and I think the iPad brings that aspect back to computer music, without the need to tangle with physical controllers.

Another aspect of a good live performance is being honest with the audience. For example, it’s easy to cheat the audience by making a musical performance look physically more demanding than it actually is, as is arguably the case in many EDM[5] live performances. What’s important is that the performer shouldn’t feel as if he/she is betraying the audience by making the musical performance look more complicated than it actually is. In short, I tend to think that playing music live requires some sort of special skill, technical or artistic. Live performance is about showing this skill to the audience. But if this skill is, e.g., merely a push of the play button, then I, as a member of the audience, feel betrayed and start wondering whether the performer is doing it for the sake of art or for something else. That’s what I consider honesty in live performance. I think honesty is important in life, and I want to remember that in my art as well.

The fifth aspect of my musicianship is playfulness. I don’t avoid dark music or negative feelings, but my wish is that even in the darkest moment there’s a blinking light ahead that makes the listener smile. Perhaps my compositions are more often in major than in minor.

I believe that all these five things can be heard in this research, and they go well together with my music and the subject – iPad as a live instrument.

My experience as iPad musician

I’ve used the iPad as an instrument in various gigs, mostly with my band Haruspex. It’s a duo consisting of Ava Grayson and me. We started with improvised noise music. It was a good approach for us, because we could just start playing without defining what we were actually playing. Ava plays her laptop and I play various iPad apps. Ava has created fantastic MAX[6] patches with which she usually manipulates sound samples and creates new soundscapes from them. My role is to add another layer on top of what she’s playing. We’ve been really happy with what Haruspex has been doing and we plan to release an album in late 2016. Haruspex has been a really fruitful playground for me to play the iPad. It made my role as the iPad player easy because I wasn’t restricted to any particular musical quality or any particular app. I could choose any musical application and create sounds with it.

I like to consider the iPad more as an acoustic instrument than a computer, so that I can give analog commands to the computer instead of precise keyboard commands. Achieving that with an outcome that falls within specific limits (tempo, scale, chord progression) usually requires making settings beforehand. It means a little bit of work but pays off later: when an instrument makes spontaneous changes possible in the music that I’m playing, it is also more expressive to me.

We also like to have a visual element in our performances. Sometimes we project the screen of the iPad for the audience, sometimes we project videos from a laptop. Projecting the iPad screen works nicely with some apps, like Tachyon and GeoSynth, and I’d like to use the iPad more for the visuals, too. The grand idea behind using images from the iPad is that I want to fight an idea that some people have: that electronic musicians might just as well be checking their emails as performing music while playing their electronic instruments.

That’s pretty much where Haruspex was left in summer 2015, and that’s where I’m continuing from with the practice part of this research.

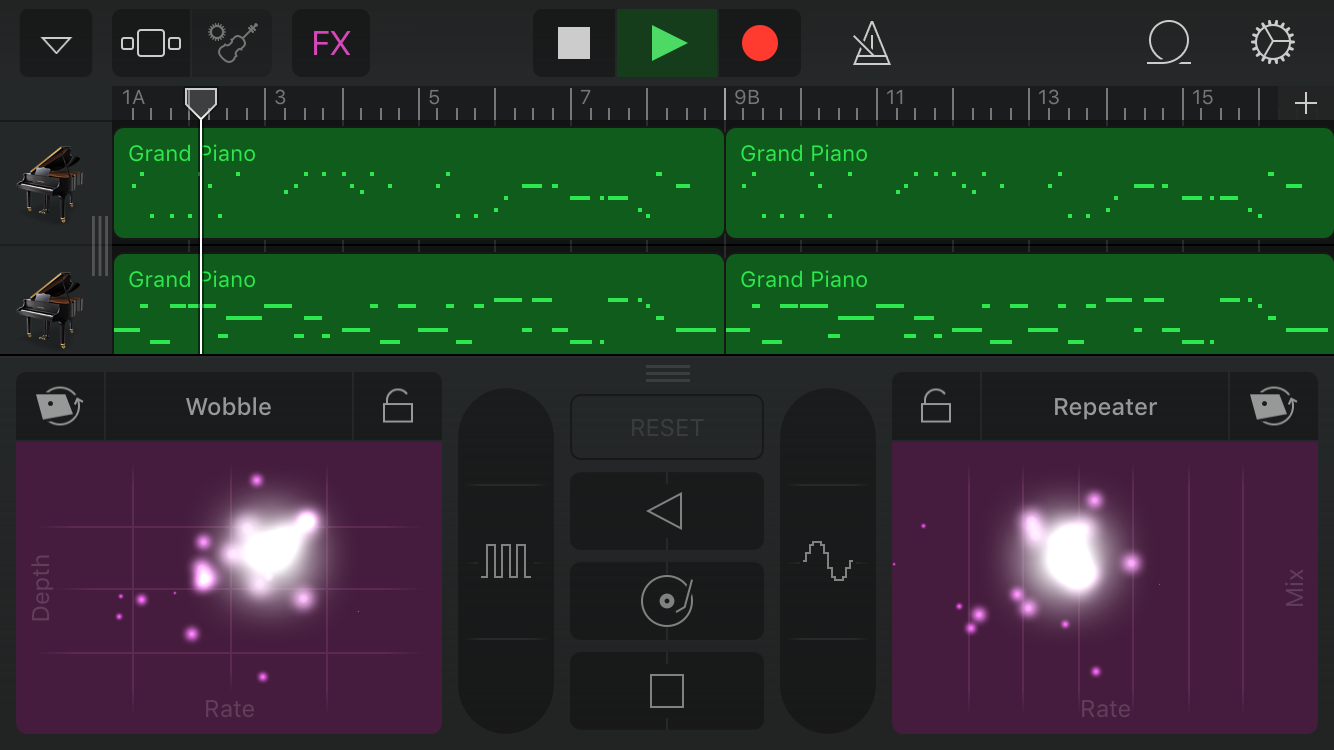

One important occasion for me as an iPad musician was the concert of iPad Orchestra at the Sibelius Academy in Helsinki in late 2014. I like to think of it as an occasion where my fellow players and I legitimised iPads as musical instruments. We played about 10 songs, a few classical compositions and some improvised music, both unaccompanied and accompanied by traditional instruments. My personal contribution was fairly modest: I played in two songs. In the first one I wanted to showcase how the iPad can be used to approach live playing with a very low barrier. I played Bach’s ‘Air on a G String’ with Magic Piano[7], accompanied by a fellow iPad musician playing a real cello. The nuances of the Magic Piano sound left a great deal to be desired, but nevertheless I was happy with the results. The other song was ‘Norwegian Wood’ by the Beatles. I invited all the members of iPad Orchestra to play in the song. I played acoustic guitar sounds from GarageBand[8] and a sitar sound from SampleTank[9], using GeoSynth[10] as an interface.

The feedback we got was very positive. I was very happy with how the arrangement of ‘Norwegian Wood’ worked. The band of six iPads worked well together. We didn’t sync the iPads but used them as if they had been traditional instruments. The apps that we used were GarageBand for guitars and electric piano, Animoog[11] for the bass and Impaktor[12] for the drums.

For a while there was a buzz about our gig, but we haven’t played any new gigs. I think the main reason is that it doesn’t make a big difference whether the songs we played are played with more traditional instruments or with iPads. There’s just the novelty factor of using iPads, and once we had shown ourselves, and the world, that iPads can actually be used in the way we used them, the idea of playing more became rather boring. I think that we should find material for the set that is specifically related to iPads, not just any music or any songs. That’s one of the reasons why I’m doing this research, too.

In addition to Haruspex and iPad Orchestra I’ve used the iPad on various more informal occasions, mostly at friends’ parties and for DJing. I’ve used it many times as a drum machine (mainly the DM1 app) in live gigs. I think the iPad functions as a very good drum machine, and is actually more versatile than a hardware drum machine, because it can at the same time be used as (nearly) any other instrument, too.

Feelings

The expectations that I have towards this research are divided into two. On one hand there are the academic expectations where I confirm to the academic world that I’m able to handle the subject in a meaningful way and conduct artistic research. On the other hand there are my personal artistic expectations, which I’ve set quite high. Now that I’m devoting so much time and effort to a project, the outcome should be nearly perfect.

But it’s good to say it aloud and honestly: the outcome is probably not the ultimate masterpiece. However, it is something interesting, and the most important thing is that it is finished and hasn’t been forgotten in the digital drawer. It’s wise to get the research done and out in the world as soon as possible. The subject is rather new, and there exists fairly little research on it. But there is related activity going on all the time and eventually there will be research, too. The sooner I get this done, the bigger the possibility that other people find it interesting and even useful. My personal interest in the subject is to produce something that is unique, that makes clever use of emerging technology – and makes me produce interesting music.

I feel great about doing something that makes me a more productive musician. But it’s also contradictory. I often tend to think that music is most genuine, when it works as a whole – when I as a listener pay attention to the entire creation, not only to details and analyse how it’s been produced. Now, for the practice part of the research I act the opposite: I produce music through analysis. One of my goals is to expose the musical interface for the audience, and make them see how the music is created. It’s a little bit frightening. Will I become less interesting and too contemplative as a musician? But, on the other hand, only the audience, i.e. the viewers and listeners, can provide me with this insight.

Aesthetics

Personally, in this artistic research, the most significant result is the music that comes with the research. But how can I tell whether the music is good or not? In the context of the research it might not matter, but personally it’s very important. The aesthetics need to be validated by a bigger audience. The aesthetic judgement makes an important contribution to the pragmatism of the research (Brown & Sorensen 2008, p. 161). However intimidating it is, I need to give the result to the general audience to judge, rate and review. If the end result is aesthetically pleasing to others too, it will keep me pushing forward and not leave the practice in this research as a one-time experiment. That’s also something worth striving for.

What are my aesthetic preferences besides experimental pop music in major? My style is a mixture of many sources. There isn’t one particular genre to which my music would belong. Perhaps the most significant feature is that I want to cross the borders of different genres, but to break the boundaries in a kind way. My intention is not to shock like punk in the 1970s, or to be progressive for the sake of progressiveness. I think the main goal is to inspire both feelings and thoughts. I want to tickle both sides of the human brain.

I’ve grown to listen to and experience all kinds of sounds and music. I want to bring out the best sides of the different musical worlds that I know. If I’m patiently painting a long soundscape, at some point I want to reward the listener, and myself, with a hook. If I’m trying to construct a perfect pop song, I want to add experimental sounds, or use experimental methods to produce it. I want to compose electronic music that doesn’t sound like electronic music. I want to compose music that has an acoustic feel to it, but that makes use of the latest inventions in music technology.

I got very inspired by a talk given by a musician, composer and sound designer Tuomas Norvio in May 2015. He gave a speech in an event aimed at theatre sound designers and told about his methods and thoughts on sound design. His latest work had been sound design for dance and circus performances, but he has a background in the pop group Killer and in a pioneering Finnish electronic music group Rinneradio. I was inspired by the way he explained how he creates different forms using sound. With the forms he conveys the feelings that the director wants to convey. The most important thing he’s realized is that those forms don’t need to fit in any pattern or scale or genre. The sounds need to act as messengers, and he tries to deliver messages that he thinks are effective but also aesthetically pleasing. I thought his words were very wise and I could easily share his views.

Theoretical background

The theoretical background provides an understanding of what previous researchers have discovered. In this chapter I go through what can be done musically with a computer, or with a computer full of sensors. What are the aspects that should be taken into consideration when designing a new digital instrument? Not all the points covered in this chapter show up in the practice section, but at least they point the readers towards further investigation, if some of the fields receive too little attention here.

The first part of the theoretical background comes from the field of electronic music, or more precisely computer music. The distinction between electronic and computer music is nowadays not that clear, because in principle nearly all the aspects that once were essential to electronic music can be achieved in the digital domain using computers.

The second part of the theoretical background is in NIME. Some research has already been done in the NIME community on using touch screens, different sensors and iPad as a musical instrument.

Computers in music

The point of this section is to explain that music in the context of this research should not be regarded as Computer Music, or Electronic Music, or anything special, just music. This idea is communicated by going through how computer music has been evolving over the past 50+ years, and how gradually computers have become more and more responsive and interactive. Nowadays computers are just one way to make music, and a rather important one. Music created with computers doesn’t necessarily have to sound like computer music as we normally think of computer music.

“After humans started making music with something else than our voice and heartbeat, we incorporated machines of sorts in our music. And computers are just one example in that process.” (Cox & Warner 2004, p. 113) I think this quote summarizes how I feel about music technology today. I feel that computers are not something separate from other means of making music. A computer can be used for creating music, just like any more traditional musical instrument. On the other hand, a computer as a musical instrument is more powerful than a traditional acoustic instrument, because computers can take and obey orders and repeat them for as long as wanted, while the player does something else or adds something on top of what’s already being played. Bongers (2007, p. 9) says "The essence of a computer is that it can change function under the influence of its programming." Computers do exactly and literally what we tell them to do, without the ability to interpret what we might mean. Computers are also very strict in how they take in input. Traditionally we manipulate computers with devices such as keyboards, where a key is binary: it’s either pressed or not, with no middle ground. I believe that this has had an influence on how computers have been used as musical instruments.

However, the use of touch screens and sensors for giving input to the computer is gradually changing how computers are used as musical instruments. The addition of the touch screen alone is a step towards computers becoming better instruments: they can be manipulated with ten fingers. Different sensors provide even more analog-like[13] means to give orders to the computer, which makes the computer a more expressive instrument.

It’s likely that as soon as we figure out better ways to give (more ambiguous) orders to computers, and we are able to teach a computer to interpret the player better, computers will be even better instruments. But that may require a bit more intelligence than the computers have today.

There was a time when I personally disregarded electronic music as not proper music and not to my taste. I used to think that there’s little life in a steady electronic beat and basically no groove. Recently I’ve changed my mind. I think it’s mainly because enough time has passed and computers have invaded the music production world so thoroughly. I’ve been exposed to so many groovy examples of electronic music that it has changed my mind. I think that a combination of a drum machine and a real drummer also produces groovy results. In one way or another computers are part of nearly every commercial music production, if not as instruments, then in the recording or post-production phase. Anything you can do in an analog studio and with analog instruments, you can do with computers, and even more. And this is constantly evolving.

I don’t think there’s a special need to talk about computer music as such, unless something specific is meant by that. Computers are used in music, but not necessarily for computer music. However, it’s still important to state that this is research on digital electronic instruments.

A musical instrument is an object that has been created with the intention of producing musical sounds. Bongers (2007, p. 9) lists different types of musical instruments:

- Passive Mechanical (e.g. flute and piano)

- Active Mechanical (e.g. organ)

- Electric (e.g. electric guitar)

- Analog Electronic (e.g. analog synthesizer)

- Digital Electronic (e.g. digital synthesizer and computer)

Computers are digital electronic instruments. According to Bongers (2007, p. 9) digital electronic instruments play an important role in the development of instruments: "Although there have been programmable mechanical systems and analogue electronic computers, the digital computer has had the biggest impact on society and therefore forms a separate category." This doesn’t mean that computers have to be kept separated from other instruments. Just as we mix string instruments with percussive instruments, we can mix digital instruments with some other instruments. And taking the idea even further, the power of digital instruments is that they can mimic the sounds of all the other groups, and go beyond and provide sounds that no other instrument does (Bongers 2007, p. 10).

However, I think the distinction between electric and electronic is important. The evolution from electronic instruments to digital and computer-based instruments has provided freedom for the design of the interface. Players’ interaction with traditional instruments is often tied to the physical qualities of the instrument, whereas in digital instruments the sound engine is usually separated from the playing interface. In the digital domain, the playing interface can be designed separately, and it’s rather easy to try out different interfaces and come up with the most suitable one for the situation. In the context of this research a digital electronic instrument is any computer program that can be used for producing musical sounds.

One issue that is often underlined in computer music is how the audience feels about experiencing it in a live situation, mainly because the sound source, or the action that triggers the sound, is not visible to the audience. It can cause mixed feelings. One might argue that the listener is very rarely interested in how music is produced or made. I would say that in live music it makes a difference, at least in terms of expressivity. If the music comes to life via a complex but elegant computer algorithm, but the audience cannot see it, the impact remains small.

One approach to tackle this is to take the performance and the musicians away from the live concert and offer an acousmatic[14] concert experience with only loudspeakers and without any intention to give a visual representation of the music. Another approach, which is also mine, is to make the computer screen visible and show the audience what kind of interaction is taking place. This can be done, for example, by projecting the screen of the computer to the audience. Collins (2003) puts it bluntly: “How we could readily distinguish an artist performing with powerful software like SuperCollider[15] or Pure Data[16] from someone checking their email whilst DJ’ing with iTunes?”

Brief history of computer music

Computers have been making intentional noise since 1947 (Elsea 1996). The start of incorporating computers in music was slow, but now, almost 70 years later, computers are used for many tasks in music making. Probably even the most purely acoustic recording is produced with some computing power involved at some phase of the production. In a live set, powerful computing is most commonly found on the table next to the performer, in the form of a laptop, usually with a bunch of musical controllers to trigger the sounds and the music.

The earliest programming environment for sound synthesis, called MUSIC, appeared in 1957 and was written by Max Mathews at AT&T Bell Laboratories (Wang 2007, p. 58). The same year Illiac Suite – the first complete computer composition – was created, using computer algorithms (Essl 2007, p. 112). After Mathews’ initial contribution the main development was done in various musical research centers, such as M.I.T., the University of Illinois at Urbana-Champaign, the University of California at San Diego, the Center for Computer Research in Music and Acoustics (CCRMA) at Stanford University, and the Institut de Recherche et Coordination Acoustique/Musique (IRCAM) in Paris (Elsea 1996). Research at these centers was aimed at producing both hardware and software (ibid.). For a decade, the sound synthesis software (and punch cards) was coupled to the particular hardware platform it was implemented on, but in 1968 computer music programming was implemented in a high-level general-purpose programming language called FORTRAN and could be ported to any computer system that ran FORTRAN (Wang 2007, p. 60).

In the early days of computer music computers were treated separately from other instruments, even from electronic ones. Since then, computers and electronics have been getting closer to each other, and electronic instruments such as synthesizers have become more and more digital. The convergence of computers and other instruments started already in the 60s. However, only in the late 70s did composers start to get involved in the computer music scene. Using computers for composing was difficult because the computers were located in research centers, and the process of getting compositions into audible form was very time consuming. For composers who were interested in new sounds and new possibilities, the analog synthesizer provided faster results than computers. This led to a situation where the full advantage of computers was not utilized: computers were used not as a sound source but to control analog synthesizers. This also led to the development of sequencers. (Elsea 1996)

Finally, it was the development of the microprocessor in the 70s that made computers controlling synthesizers accessible to composers and musicians who were not involved in the network of research institutions. In 1981 a consortium of musical instrument manufacturers began talks that led to the MIDI[17] standard in 1983. This made it possible to connect any computer to any synthesizer. Since then the music stores have become stuffed with MIDI devices of all sorts, and the hybrid system is nowadays the norm. (ibid.)

According to Elsea (ibid.) the coming of MIDI had little effect on the computer music research institutions, because MIDI was primarily used where quick and simple connections were needed. Research centers were interested in other things, for example in developing better ways to give orders to the computer, such as Csound (ibid.). MIDI had the greatest impact on the commercial sector, not only because of the connectivity but also because of the price. Yamaha DX-7, the first programmable digital music synthesizer, equipped with MIDI, was priced at two thousand dollars, whereas the previous generation machine capable of music synthesis, PDP-11, cost a hundred thousand dollars (Schedel 2007, p. 29). It meant that electronic and computer music gradually found their way to the musicians on the street, too.

Different ways to use computers in music

Computers are used for two different tasks in music: 1) electronic methods of producing sound and music and 2) computational methods of making music (e.g. Collins & d’Escrivan 2007). The boundary between those two approaches is getting more blurred all the time, since there are DAWs[18] that are mainly used for recording and sequencing but that also include several ways to create and manipulate sounds and to use computational methods for creating music.

Taking the idea a bit further, computers are used for five different tasks (ibid.):

- traditional sequencing and multi-track recording

- sound synthesis (and effects)

- creating algorithmic processes

- music research (to study properties of sound or rules embedded in musical aesthetics)

- creating digital instruments and augmenting existing instruments, i.e. hyperinstruments

In the context of this research tasks 1, 2, 3 and 5 are the most important. In this chapter I mostly concentrate on the computer as a tool for creating algorithmic processes; that’s where the real novelty lies in using a computer as a musical instrument. The fifth task, creating digital instruments and adding computational power to existing instruments, is discussed in the next chapter in more detail.

For the first task – traditional sequencing and multi-track recording – it’s quite clear to see the advantages of a computer over traditional analog tapes for recording and sequencing. Since everything is done digitally, there are simply no physical tapes to store and manipulate. The scalability in the digital domain is so much bigger. All the cutting and pasting activities and looping have become significantly easier with digital recording studios, not to mention the power of ‘undo’.

The following two tasks are about algorithms. An algorithm is a sequence of instructions for solving a specific problem in a limited number of steps (Essl 2007, p. 107). Every algorithm can be translated into a computer program, and computers usually process algorithms, if not better, then at least many times faster than humans. Basically, digital sound synthesis and effects are also algorithms, but I treat them separately, because synthesis and effects exist in the analog domain, too. Computers are very good tools for sound synthesis and effects. Digital signal processing (DSP) is the method in which sounds are constructed sample by sample to form audible sounds. Effects are applied using the same principle: taking in one sample at a time and calculating a new value for it, according to the effect algorithm. Computers can be used to do very precise manipulations, but a lot of computing power is also required. One second of CD-quality audio consists of 44 100 samples per channel. In practice it means that the computer needs to process over 44 000 samples every second. This hasn’t been a big task for computers for a long time, though. But for complicated effect calculations even modern processors may be using their full capacity.
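To make the sample-by-sample idea concrete, here is a minimal sketch in Python. It is only an illustration under my own assumptions: the function names `sine_wave` and `apply_gain`, the 440 Hz test tone and the gain value are hypothetical choices, and a real instrument would stream samples to the audio hardware instead of collecting them in a list.

```python
import math

SAMPLE_RATE = 44_100  # samples per second of CD-quality audio, per channel


def sine_wave(frequency_hz, duration_s, sample_rate=SAMPLE_RATE):
    """Synthesis: compute a sine tone one sample at a time."""
    n_samples = int(duration_s * sample_rate)
    return [
        math.sin(2 * math.pi * frequency_hz * n / sample_rate)
        for n in range(n_samples)
    ]


def apply_gain(samples, gain):
    """A very simple effect: read each sample and calculate a new value for it."""
    return [gain * s for s in samples]


# One second of a 440 Hz tone (an assumed example pitch), then made quieter.
tone = sine_wave(440.0, 1.0)
quiet_tone = apply_gain(tone, 0.5)
print(len(tone), "samples computed for one second of sound")
```

Even this trivial effect touches every one of the samples in a second of sound, which hints at why more complicated effect calculations can become demanding.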

The basic pattern of how a digital instrument works is that there’s an input from a human player or from another musical application. Then there’s processing according to the parameters of the input, and then output. It’s a simple pattern, but the advantage of digital instruments lies in the fact that these simple elements can be highly complex. The input doesn’t necessarily have to be a human player, and there may be several inputs to the same system. The process can be defined and programmed to the finest detail by the instrument maker, and it can be highly complex, too.

Figure 2. How all digital instruments work: input, process, output.

The third task – algorithmic processes – is very much a product of the digital domain. There are many ways in which computers can be programmed, and many ways to create algorithmic processes. Computers can play patterns on their own, as accompaniment, or analyse music and play music according to that. Or computers can be used as generative music machines, playing constantly evolving music on their own. Or simply, computers can be used to give random values and thus automatic variation to the musical process.

The fourth task – music research – relates to the fact that synthesis can be seen as the opposite of analysis. If something can be constructed, then it can also be deconstructed, or analysed, and computers are used for analysis. There are many aspects that can be analysed in music, starting from the contents of the sound wave, such as pitch, tone colour, tempo and harmony. But computers can also analyse the contents of music at the macro level, such as repeating patterns and musical style. Many of the analysis techniques are also used for effects and sound manipulation, tasks that were not possible using analog electronic equipment.

The fifth task – digital instrument building – is about how computational power is used for building and augmenting instruments; how the aforementioned ways of using computers for music making take place in instruments intended for live playing.

Let the instrument play

Computers in music have made possible new kinds of composition methods at the same time that they have caused disruption in the social and cultural practice of music making (Rowe, 1993). I think what Rowe says is true for many disrupting practices. Music would have survived just fine without the introduction of computers. But their appeal was so strong that researchers wanted to keep using and researching them. Where there’s something new and emerging there’s also conservatism which causes resistance.

One example of new composition techniques is interactive music systems, or interactive instruments. The definition of interactive instruments by Chadabe (1997, p. 291) says: "These instruments were interactive in the same sense that performer and instrument were mutually influential. The performer was influenced by the music produced by the instrument, and the instrument was influenced by the performer’s controls." Simply put, there’s an algorithm in a computer that causes it to change its behaviour based on the input of the player. Interactive music systems may create a shared creative aspect of the process in which the computer influences the performer as much as the performer influences the computer (Drummond 2009, p. 125).

According to Rowe (1993) algorithmic composers explore some highly specific techniques of composition at the same time that they create a novel and engaging form of interaction between humans and computers. Such responsiveness allows these systems to participate in live performances of both notated and improvised music (ibid).

Chadabe (1997, p. 291) writes about interactive instruments: "musical outcome from these interactive composing instruments was a result of the shared control of both the performer and the instrument’s programming, the interaction between the two creating the final musical response." Drummond (2009, p. 125) says that interactive computer music systems such as those Chadabe describes challenge the traditional, clearly delineated western art-music roles of instrument, composer and performer. And there’s no reason why a computer musician couldn’t mix interactive musical systems with other instruments that have a simpler relationship between input and output.

Drummond (2009, p. 124) says that "an interactive system has the potential for variation and unpredictability in its response, and depending on the context may well be considered more in terms of a composition or structured improvisation rather than an instrument." It’s difficult to define the borders between interactive instruments (or systems) and structured improvisation compositions.

Another field where computers provide an advantage over other means of making music is generative music. Generative music is a term popularised by Brian Eno, referring to music that is ever-different and changing, and that is created by a system. “[A]ll of my ambient music I should say, really was based on that kind of principle, on the idea that it's possible to think of a system or a set of rules which once set in motion will create music for you.” (Eno, 1996). A computer is a good system for setting the rules and creating ever-changing music.
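As a small illustration of "a set of rules which once set in motion will create music", the following Python sketch lets three notes repeat in loops of different lengths. It is one hypothetical rule set chosen for this example, not a description of any particular composer's system; the note names, loop periods and offsets are all assumptions.

```python
def generative_events(loops, total_steps):
    """Return (step, note) pairs produced by loops of different lengths.

    loops maps a note name to (period, offset), both counted in steps.
    A note sounds whenever (step - offset) is a multiple of its period.
    """
    events = []
    for step in range(total_steps):
        for note, (period, offset) in loops.items():
            if step >= offset and (step - offset) % period == 0:
                events.append((step, note))
    return events


# Hypothetical rule set: three notes with loop lengths that share no common factor.
LOOPS = {"C4": (7, 0), "G4": (11, 3), "E5": (13, 5)}

events = generative_events(LOOPS, 40)
for step, note in events:
    print(step, note)
```

Because the loop lengths 7, 11 and 13 share no common factor, the combined texture only starts to repeat after 7 × 11 × 13 = 1001 steps, although the rules themselves are very simple.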

I think interactive systems, including systems that produce generative music, are interesting and increasingly important for me as a solo musician. I’m building a solo set where I want to create a rich soundscape and play multiple sounds and timbres at the same time. I think that apps that work as interactive music systems could be helpful. Instead of preparing an extensive amount of samples and loops I could use ‘smart’ applications, music systems that interpret my playing and respond to it. Or instead of having full control over what’s playing I could give a generative music system some power and let it guide me.

Programming your own music

In the course of the evolution of using computers for music making, the focus has turned from getting music out of a computer to making the computer interact with music. There are many different ways to give orders to the computer and many different interfaces to do so.

There are thousands of software instruments with rich user interfaces that can be played directly without the need to program them. But if the ready-made apps don’t provide what the musician is striving for, it’s also possible to create your own musical program using environments dedicated to that. There are musical programming environments with a graphical user interface (e.g. Max and Pure Data) and with a text-based interface (e.g. Csound and SuperCollider). A graphical interface presents the data flow directly, in a what-you-see-is-what-you-get kind of way, whereas the text-based systems don’t have this representation. Understanding the syntax and semantics is required to make sense of the text-based systems. However, many tasks, such as specifying complex logical behaviour, are more easily expressed in text-based code. (Wang 2007, p. 67)

Text is a powerful and compact way of giving orders. Text-based systems are usually used for run-time modification of programs to make music (ibid.). If this happens in a live situation the action is often called live coding (ibid.). Live coding music means creating a musical performance with a computer whose screen is projected for the audience to see. Live coders make use of the audio synthesis and manipulation capabilities of the musical programming environments they are using.

Some musical programming environments (such as SuperCollider) also enable networked music. Nearly every computer is connected to some network, which means that musicians are able to use the network to communicate with other musicians or with the audience, and they don’t have to be physically in the same place. This is quite a big topic in computer music but it is not discussed more extensively in this research.

One method that can be used in live coding and also in composing or creating interactive music systems is algorithmic composition. By using algorithmic methods such as automatisms, random operations, rule-based systems and autopoietic strategies, some artistic decisions are partly delegated to an external instance (Essl 2007, p. 108). This can be regarded as giving up artistic freedom, but on the other hand it enables the artist to gain new dimensions that expand investigation beyond a limited personal horizon (ibid.). Algorithms can be regarded as powerful means to extend our experience – algorithms might even develop into something that may be seen as an ‘inspiration machine’ (ibid.). It’s possible to form algorithms with both graphical and text-based interfaces, but in text form they are often very compact and more easily understandable. The use of algorithms is not solely restricted to computers, but computers are very good tools for an algorithmic approach. Due to its rule-based nature, every algorithm can be expressed as a computer program (ibid.).
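The combination of a rule-based system and random operations can be sketched in a few lines of Python. This is a hedged illustration only: the transition table `RULES` and the function `compose` are hypothetical and invented for this example, and they are not taken from Essl or from any existing composition environment.

```python
import random

# Hypothetical rule set: for each note, the notes that are allowed to follow it.
RULES = {
    "C": ["D", "E", "G"],
    "D": ["C", "E"],
    "E": ["D", "G"],
    "G": ["C", "E"],
}


def compose(start, length, seed=None):
    """Build a melody by repeatedly picking a random allowed successor."""
    rng = random.Random(seed)  # a fixed seed makes the 'random' result repeatable
    melody = [start]
    while len(melody) < length:
        melody.append(rng.choice(RULES[melody[-1]]))
    return melody


melody = compose("C", 8, seed=1)
print(" ".join(melody))
```

The composer decides the rules and the starting note, while the choice of each individual note is delegated to the random generator. Changing the seed gives a different melody that still obeys the same rules.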

It’s easy to define computer music as something separate from music played with acoustic instruments. In some extreme cases like live coding it may seem a very distant practice. My view is different, which is reflected by the following quote: “Music has always inhabited the space between nature and technology, intuition and artifice” (Cox & Warner 2004, p. 113). According to Cox and Warner (ibid.) machines are no less important in the evolution of music than the human heartbeat and voice. Acoustic instruments can also be regarded as mechanical machines of sorts. Following the same idea we can think of a symphony orchestra as a machine, too, with the conductor being the player of the musical machine. Actually, at least in theory it would be possible to construct a symphony orchestra out of computers. Computers are well capable of replicating the sounds of the acoustic instruments used in a symphony orchestra. If the computers were equipped with proper sensors to follow the conductor, a symphony orchestra made of computers, each playing the corresponding instrument sounds, could be conducted in a similar way to a traditional symphony orchestra consisting of human players.

There are all kinds of wild ideas about the role of computers in music in the future. It’s pretty evident that computers are, and are going to remain, a staple of the recording studio. It’s not clear, however, how computers are going to be used in live gigs in the future. There are many ways to use them and I think computer musicians have only scratched the surface of their capacity. During the past decades computers have become more and more portable, with various methods to interact with them. Making music with a computer can be regarded as its own genre. However, I don’t want to make a big distinction between music that is produced with a computer and music produced with some other instrument, whether it is a saxophone, a piano, or even a symphony orchestra.

New Interfaces for Musical Expression (NIME)

The goal of this section is to find relevant subjects in the field of NIME that can be used later in the definition of my practice and then in the analysis section as reflection points. After every NIME conference, the research papers are made public for researchers around the world to study. It’s easy to see what the topics of each year have been. In the ‘proceedings’, the research papers of each conference, the topics of touch screens, tablets and mobile music have been covered in recent years. They are a good source of material and inspiration for this research.

The second part of the theoretical background comes from New Interfaces for Musical Expression (NIME). Here’s what is said about NIME on its own website[19]: “The International Conference on New Interfaces for Musical Expression gathers researchers and musicians from all over the world to share their knowledge and late-breaking work on new musical interface design. The conference started out as a workshop at the Conference on Human Factors in Computing Systems (CHI) in 2001. Since then, an annual series of international conferences have been held around the world, hosted by research groups dedicated to interface design, human-computer interaction, and computer music.”

NIME is both a yearly conference and a field of research. The topics of NIME range from augmented interfaces for traditional instruments to touch screen interfaces as musical input methods to playing music using gesture recognition without any interface at all – and everything in between. NIME could perhaps exist without computers, but as the research is mainly about digital electronic instruments, there’s usually a computer involved.

The research around the topics of NIME took its first steps at the same time as digital instruments started to become popular. According to Jordà (2007, p. 97) the interest in alternative music controllers started to grow with the advent of MIDI. The role of MIDI was important in this: it standardised the separation between input (control) and output (sound) of electronic music devices. After MIDI, in the late 1990s, the introduction of OSC[20] provided even more possibilities for the players and makers of experimental musical interfaces to interact with the instrument (Phillips 2008).
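The separation between control and sound can be seen in what a MIDI message actually contains. The Python sketch below builds the three bytes of a note-on and a note-off message. The function names `note_on` and `note_off` are hypothetical helpers written for this illustration; the byte layout (a status byte of 0x90 or 0x80 combined with the channel, followed by a note number and a velocity between 0 and 127) follows the MIDI standard. Nothing is sent to a device here.

```python
def note_on(channel, note, velocity):
    """Build a 3-byte MIDI note-on message.

    channel: 0-15, note: 0-127, velocity: 0-127.
    """
    if not 0 <= channel <= 15:
        raise ValueError("channel must be between 0 and 15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be between 0 and 127")
    return bytes([0x90 | channel, note, velocity])


def note_off(channel, note):
    """Build a 3-byte MIDI note-off message with release velocity 0."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be between 0 and 15")
    if not 0 <= note <= 127:
        raise ValueError("note must be between 0 and 127")
    return bytes([0x80 | channel, note, 0])


# Note number 60 is conventionally middle C; velocity 100 is an arbitrary example.
message = note_on(0, 60, 100)
print(message.hex(" "))
```

The message says which key was pressed and how hard, but nothing about what the note should sound like. That decision is left entirely to the receiving synthesizer or app, which is why any controller can be paired with any sound source.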

NIME covers concepts of human–computer interaction for musical instruments. Researchers approach the topic in different ways, but the main idea is to explore how a player can give orders to computers to play music in a precise but rich way, and how the response of a computer can be sent back to the player. Miranda and Wanderley (2006) propose a model for a digital musical instrument where the instrument contains a “control surface” and a “sound generation unit” conceived as independent modules related to each other by mapping strategies (the arrows between the boxes in figure 3). The model that Miranda and Wanderley suggest is depicted in figure 3, where the main components, gestural controller and sound production, are what could be in the ‘Process’ box in figure 2 (see page 19). Miranda and Wanderley emphasize different forms of feedback from digital musical instruments: primary (tactile and visual) and secondary (audible) feedback.

Figure 3. Approach to represent a digital musical instrument (Miranda & Wanderley 2006)

Sensors play an essential role in many NIME research topics: they are how gestures are fed into digital musical instruments. There are many types of sensors that can be used in musical instruments, such as distance, flex and pressure sensors. Sensors observe the real-world actions of a player and transmit them to a computer. Some sensors are able to determine gestures out of the box, but gesture recognition patterns can also be programmed on the computer.

One important concept in NIME is mapping, that is, the connection between gestural parameters (input) and sound control parameters or audible results (output) (Jordà 2004, p. 327). The most direct kind of mapping, which associates each single sound control parameter (e.g. pitch or amplitude) with an independent control dimension, has proved to be musically unsatisfying, exhibiting a toy-like characteristic that does not allow for the development of virtuosity. More complex mappings, which, depending on the type of relation between inputs and synthesis parameters, are usually classified as one-to-many, many-to-one or many-to-many, have proven to be more musically useful and interesting (Hunt & Kirk, 2000, p. 251).

Figure 4. Different kinds of mappings. Image by Valtteri Wikström (SOPI 2015).

One-to-one mapping maps one input directly to one output; as a mathematical function it takes the form y(x). One-to-one mapping is usually about scaling and transforming data. One-to-many mapping is about using limited controls for a more complex system, but it is mathematically similar to one-to-one mapping. One-to-many mapping can create conceptual difficulties for the interface, though. Sometimes it makes sense to control a single output device with many inputs, which is called many-to-one mapping. Its mathematical function takes the form y(x1,x2,x3,…,xn). In the most complex case, many-to-many mapping, the designer needs to think conceptually about the relationship between outputs as well as inputs. Mathematically, many-to-many mapping is similar to many-to-one mapping. (SOPI 2015)
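The mapping types described above can be illustrated with a minimal Python sketch. It is not taken from any of the cited sources: the control names (slider, pressure, tilt), the parameter names (cutoff_hz, amplitude) and all value ranges are hypothetical and chosen only for illustration.

```python
def scale(x, in_lo, in_hi, out_lo, out_hi):
    """Linearly rescale x from [in_lo, in_hi] to [out_lo, out_hi], clamping x to the input range."""
    x = min(max(x, in_lo), in_hi)
    t = (x - in_lo) / (in_hi - in_lo)
    return out_lo + t * (out_hi - out_lo)


def one_to_one(slider):
    # y(x): a single input is scaled into a single sound parameter.
    return {"cutoff_hz": scale(slider, 0.0, 1.0, 200.0, 8000.0)}


def one_to_many(slider):
    # Several functions of the same x: one control drives several parameters.
    return {
        "cutoff_hz": scale(slider, 0.0, 1.0, 200.0, 8000.0),
        "amplitude": scale(slider, 0.0, 1.0, 0.2, 1.0),
    }


def many_to_one(pressure, tilt):
    # y(x1, x2): two inputs are combined into one sound parameter.
    return {"amplitude": pressure * scale(tilt, -1.0, 1.0, 0.5, 1.0)}


def many_to_many(pressure, tilt):
    # Every output is a function of several inputs (hypothetical combination).
    return {
        "amplitude": pressure * scale(tilt, -1.0, 1.0, 0.5, 1.0),
        "cutoff_hz": scale(pressure + abs(tilt), 0.0, 2.0, 200.0, 8000.0),
    }
```

In this sketch the many-to-many case is simply a collection of many-to-one functions, which mirrors the observation that the two are mathematically similar; the design difficulty lies in choosing combinations that feel coherent to the player.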

Interfaces

The interfaces of digital musical instruments are free from many physical constraints. A basic touch screen can be configured to have two dimensions, the X and Y axes, whereas a piano has only an X axis. The piano has a one-dimensional playing interface: going from left to right, the pitch rises. In addition, the amplitude of a piano note can be controlled by pressing the key in a different position, which can be regarded as a very limited Y axis. In comparison, an XY pad of a touch interface can be configured to send pitch data according to the touch point on the X axis and amplitude data according to the touch point on the Y axis. Thus the player is able to alter the sound freely along both the X and Y axes at the same time.
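As a rough sketch of the XY pad configuration described above, the following Python function turns a normalised touch point into a pitch and an amplitude. The function name, the note range and the assumption that touch coordinates arrive as values between 0 and 1 are my own illustrative choices, not a description of any particular app. The conversion from MIDI note number to frequency uses the standard equal-temperament formula with A4 (note 69) at 440 Hz.

```python
def xy_pad_to_sound(x, y, low_note=48, high_note=72):
    """Map a touch point (x, y in 0..1) to (frequency in Hz, amplitude in 0..1).

    low_note and high_note are hypothetical MIDI note numbers for the
    left and right edges of the pad.
    """
    # Clamp the touch coordinates to the pad area.
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)

    # X axis: continuous (not quantised) pitch between the two edge notes.
    note = low_note + x * (high_note - low_note)
    frequency_hz = 440.0 * 2.0 ** ((note - 69.0) / 12.0)

    # Y axis: amplitude, used directly.
    amplitude = y
    return frequency_hz, amplitude
```

Because the pitch is not quantised to semitones, sliding a finger along the X axis produces a glissando, something the piano keyboard cannot do.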

I see touch screen interfaces as a seamless continuation of the evolution of electronic music. I attended Bob Ostertag’s lecture at the digital art festival Resonate ’15[21] in April 2015. Bob Ostertag is an experimental sound artist and writer who has lived through the evolution of synthesizers. He has been making music with synthesizers and experimenting with different playing interfaces. He gave a very thought-provoking speech.

“Back in the 70’s with the early synthesizers there was a debate about the keyboards on the synthesizer. So Robert Moog had a keyboard on his synthesizer [...] and made a lot of money. Don Buchla did not put keyboards in his synthesizers. [...] My side of the debate said ‘why would you put a keyboard on these things?’ [...] We already have pianos, we already have organs that work really well. So, let’s do something new. And at the time all we had was knobs. So, we imagine that in the future there would be these new machine-human interfaces that were incredible – that will allow us to control synthesizers in a way that was smart, idiomatic to the medium. And over the last 40 years I’ve experimented with almost every interface that’s been proposed, and I think they all fail [...]. They all fail in a sense that there’s no human-machine interface that would inspire you to practice six hours per day for 20 years to become a virtuoso with like a violin that would inspire you, or like an oboe would inspire you.”

After the speech he performed one of his early compositions for analog synthesizers, which he had reconstructed in MAX, using an iPad as the interface for the performance. So, even though in his opinion the iPad also fails as an interface for controlling the synthesizer, he at least believed that, in 2015, the iPad was good and interesting enough for him to control the synth.

“Modern improvements to user-interfaces allow one musician to play a larger, more complex and intricate repertoire.” is how Mann (2007, p. 2) describes the possibilities of digital instruments and interfaces. It is also a positive prognosis of where the use of computers as instruments, and the invention of new interfaces for playing them, is leading. It is an ongoing development that started before computers and electronics: “The harpsichord or piano can be used to play very richly intricate compositions that a single musician would not be able to play on a harp. Similarly, an organist is often said to be ‘conducting’ a whole ‘orchestra’ of organ pipes.” (ibid.)

Mann (ibid.) continues by comparing earlier automated instruments with electronic instruments: “Some instruments, such as orchestrons, player-pianos, barrel organs, and electronic keyboards can even play themselves, in whole or in part (i.e. partially automated music for a musician to play along with). For example, on many modern keyboard instruments a musician can select a ‘SONG’, ‘STYLE’, and ‘VOICE’, set up a drum beat, start up an arpeggiator, and press only a small number of keys to get a relatively full sound that would have required a whole orchestra back in the old days before we had modern layers of abstraction between our user-interfaces and our sound-producing media.” Similarly, a single computer can be used to conduct multiple sound sources, and a touch screen is one approach to allowing non-discrete input. We are gradually able to perform more complex tasks with less effort using computers. "What can be seen in this historical development is a decrease in visibility: everything becomes smaller and less tangible, while at the same time complexity increases. This contradiction urges developers to pay more attention to the design of the interface. A whole field of research and design has emerged in the last few decades, offering us methodological and structured approaches in human-computer interaction." (Bongers 2007, p. 9)

When new interfaces are developed, there are many choices to be made. The interface should be powerful and not hide its features. It should be simple, yet provide easy access to all of its features. Touch screens are just the beginning; there are many interesting musical interfaces to come. For example, Apple has plans for creating touch screens that provide tactile feedback. Tactile feedback could tell the player how the fingers are situated on a touch screen without the need to look at them.

The E in NIME

Humans have feelings, computers don’t. Humans can interpret feelings, computers can’t. This leads to a very important concept in musical performance: expression. Expression is the act of conveying feeling in a work of art or in the performance of a piece of music[22]. Dobrian and Koppelman (2006) have studied expression in new musical interfaces: how to enable expression with instruments that are not traditional in nature, in particular digital instruments that use a computer as the sound source.

Traditional instruments come with different options for expression. Digital instruments usually don’t have such qualities unless they are specifically designed into the instrument. Digital musical interfaces should provide ways for the musician to express feelings; musicians should be able to alter the music according to current emotions and audience reactions[23]. Poepel (2005, p. 228) lists different elements of expression that work on a note level: tempo, sound level, timing, intonation, articulation, timbre, vibrato, tone attacks, tone decays and pauses. Then there are expressive aspects on a phrase level, such as rubato and crescendo (ibid.).

A good musical instrument is expressive in the sense that the player has the means to deliver the music to the audience in the desired way. According to Dobrian and Koppelman (2006, p. 278), control enables expression, but a controllable instrument isn’t necessarily expressive. Does the expressiveness of digital instruments reach the level of traditional acoustic instruments? Basically all the elements of expression could exist in digital instruments, but they need to be designed and programmed into the instrument separately, in a process where there are always many decisions and compromises to make. Dobrian and Koppelman (2006, p. 278) say that one-to-one mappings are good for precise control, but one-to-many mappings and gesture-sound relationships bring better expressive qualities when they are well designed. Designing them well means a lot of work, and that work should be done together with the players. In traditional acoustic instruments the qualities of expressiveness exist naturally (ibid.). Dobrian and Koppelman (2006, p. 279) say that expression is a product of musical training, something that keeps professional musicians interested in the instrument.

What if the player is not a human being but the computer itself? Can a computer be expressive? In my opinion, computers are currently not very good at being expressive, and all the expressiveness needs to be programmed into the composition. Humans are far more interesting players than computers. If a human being is replaced with a computer, a very important factor, human inaccuracy, is lost. Inaccuracy and slight mistakes often bring life to music. For me as an artist, it is interesting when human players make use of the computational power of the computer: its ability to synthesize interesting sounds, analyze musical content, run several processes simultaneously and use algorithmic patterns in a way that a human player with a single traditional instrument wouldn’t necessarily be able to play. In that kind of approach the inaccuracy and slight mistakes exist in a different form.

It’s also possible to augment existing instruments. According to Bongers (2007, p. 14), adding electronic elements (sensors and interfaces) to the instrument leads to hybrid instruments or hyperinstruments[24]. Bongers (ibid.) continues: “With these hybrid instruments the possibilities of electronic media can be explored while the instrumentalist can still apply the proficiency acquired after many years of training.” This is probably one of the reasons why so many interfaces of musical applications take the form of an existing instrument. On the one hand, it’s easy for an existing virtuoso to start playing the new instrument with acquired skills. On the other hand, it may be laziness on the part of the instrument designer. If it’s a new instrument with different features, why should the interface be the same? Or even worse, why resemble an existing interface if it’s not suitable for the medium, e.g. a touch screen? Fortunately there’s existing research on this topic. For example, Anderson et al. (2015) claim that a touch screen instrument laid out in major third intervals, instead of the usual 4th-interval tuning, can be more easily learned by new users without prior musical experience. So, combining aspects of traditional instruments with new digital instruments can lead to a more gradual learning curve, and perhaps even a richer experience for the player.

There’s one major flaw in touch screens in particular as a musical interface, but also in many other digital instrument interfaces: the lack of tactile (or haptic) feedback. Tactile feedback from the instrument to the player is essential so that the player knows how the fingers (or any body part that is used for musical input) are situated on the playing area. Arguably, for virtuoso players of traditional instruments tactile feedback is the most important factor, because it’s possible to analyze the outcome prior to playing a sound. “Acoustic instruments typically provide such feedback inherently: for example, the vibrations of a violin string provide feedback to the performer via his or her finger(s) about its current performance state, separate to the pitch and timbral feedback the performer receives acoustically.” (Drummond 2009, p. 130) This also means that the player doesn’t need to pay attention to the results but may sense the outcome beforehand: “With electronic instruments, due to the decoupling of the sound source and control surface, the tactual feedback has to be explicitly built in and designed to address the sense of touch. It is an important source of information about the sound, often sensed at the point where the process is being manipulated (at the fingertips or lips). This immediate feedback supports the articulation of the sound.” (Bongers 2007, p. 15)

We are approaching a future where digital systems are able to provide useful tactile feedback, but the time is not quite here yet. Papetti et al. (2015) think that touch screens are not able to provide meaningful feedback for the player: "The use of multi-touch surfaces in music started some years ago with the JazzMutant Lemur touchscreen controller and the reacTable, and the trend is now exploding with iPads and other tablets. While the possibility to design custom GUIs has opened to great flexibility in live electronics and interactive installations, such devices still cannot convey a rich haptic experience to the performer." The examples in Papetti’s list have the quality that Bongers mentioned: they lack the possibility to articulate the sound before the sound is actually heard.

Instrument vs. controller